Part 1: Geostrophic balance

Andrew Delman, updated 2023-12-22.

Objectives

To use ECCO state estimate output to illustrate the concept of geostrophic balance, and to show where it does and doesn’t explain oceanic flows well.

By the end of the tutorial, you will be able to:

Download ECCO fields and query their attributes using xarray

Plot ECCO fields on a single tile

Carry out spatial differencing and interpolation on the ECCO native model grid

Compare the two sides of the geostrophic balance equations

Compute geostrophic velocities

Apply masks in 2-D spatial plots

Use the

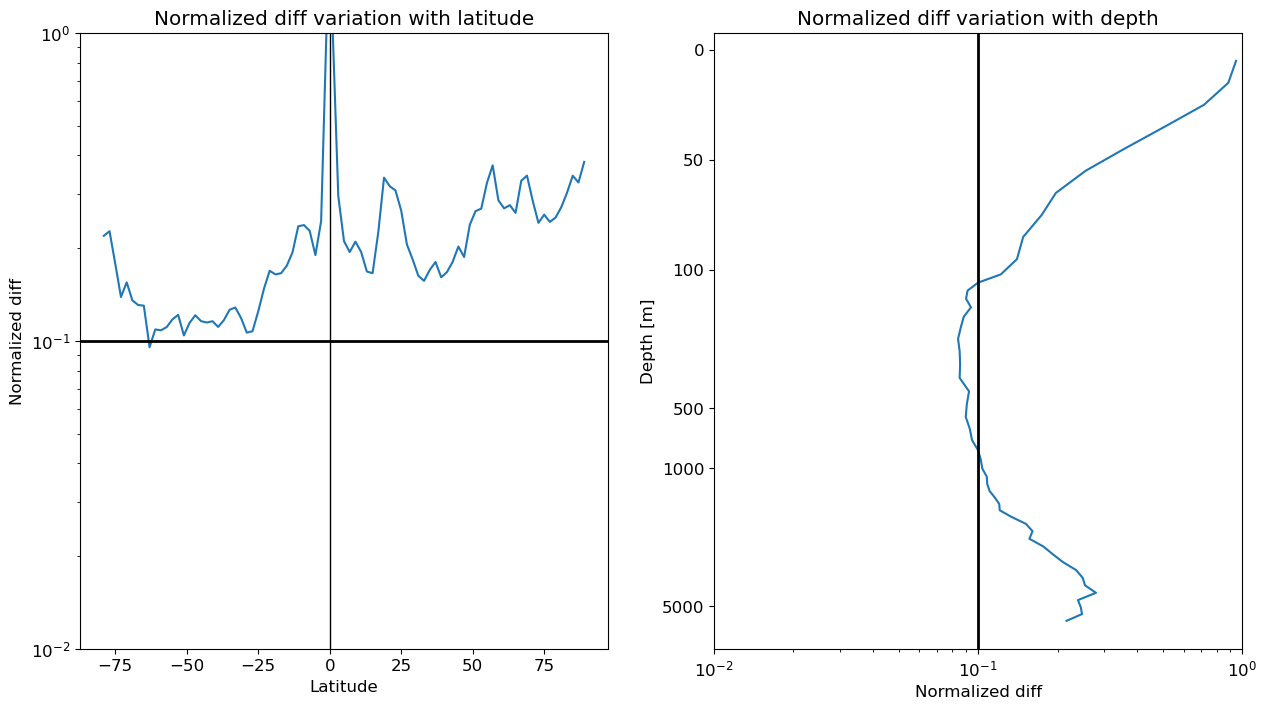

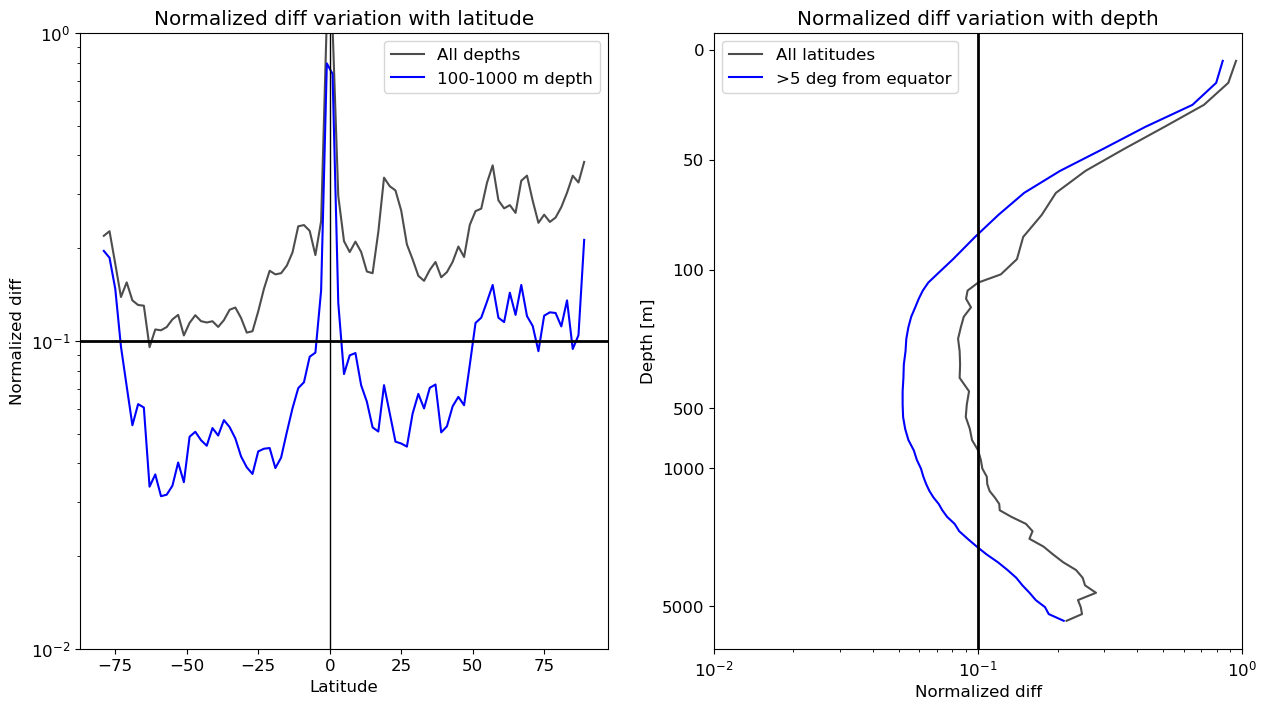

ecco_v4_pypackage to plot global maps of ECCO fieldsUse a statistical measure (normalized difference) to assess the latitude and depth dependence of geostrophic balance

Introduction

Conservation of momentum

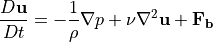

Geostrophic balance originates from the conservation of momentum, as expressed by the momentum equation:

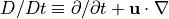

following roughly the notation used in Kundu and Cohen (2008) and Vallis (2006). This equation is a simplification of the Navier-Stokes equation for an incompressible fluid. The material derivative $\frac{D}{Dt}$ is the derivative in time following a fluid parcel. The symbol $\nu$ indicates the kinematic viscosity, and $\mathbf{F}$ represents body forces (real or apparent) exerted on the fluid.

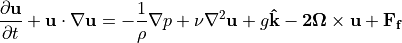

The equation above applies to any incompressible fluid, even the water in a fish tank or swimming pool. However, the body forces depend on the environment of the fluid and the scales being considered. In a physical oceanography context, three forces that are typically very important are (a) gravity, (b) the Coriolis force from the rotation of the earth, and (c) friction from surface winds or topography. So the equation above can be rewritten as

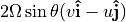

Of those last three terms:

1. Gravity effectively only applies to vertical momentum, and can be neglected in the horizontal momentum equations.
2. The Coriolis force $-2\mathbf{\Omega}\times\mathbf{u}$ can be approximated by its vertical component, $-2\Omega\sin\phi\,\hat{\mathbf{k}}\times\mathbf{u}$, where $\Omega$ is the rotation rate of Earth in radians per second and $\phi$ is latitude.
3. Friction from wind and topography is negligible in the ocean interior.

To simplify, the Coriolis parameter $f$ is defined as $f = 2\Omega\sin\phi$. So, using subscript notation for derivatives ($u_x$ is the derivative of $u$ with respect to $x$), the two horizontal components of momentum conservation are:

$$u_t + \mathbf{u}\cdot\nabla u - fv = -\frac{1}{\rho}p_x + \nu\nabla^2 u$$

$$v_t + \mathbf{u}\cdot\nabla v + fu = -\frac{1}{\rho}p_y + \nu\nabla^2 v$$

Geostrophic balance

The two horizontal momentum equations still have a number of terms, but in the global oceans most of the flow is explained by a balance between just two terms. In steady state (or for very slowly-varying ocean features) the time derivatives $u_t$ and $v_t$ are negligible, and at the large scales of major ocean currents viscosity is relatively small as well (the inviscid approximation). This leaves three terms in each equation. The 2nd term on the left-hand side, the advection term, is usually negligible at large scales as well (we’ll return to this later), so large-scale ocean flows generally follow geostrophic balance:

$$-fv = -\frac{1}{\rho}p_x$$

$$fu = -\frac{1}{\rho}p_y$$

(cf. Vallis ch. 2.8, Kundu and Cohen ch. 14.5, Gill ch. 7.6)
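To get a feel for the magnitudes involved, here is a minimal NumPy sketch under made-up assumptions. It computes the Coriolis parameter $f = 2\Omega\sin\phi$, a Rossby number $U/(fL)$ that compares the size of the advection term (roughly $U^2/L$) with the Coriolis term (roughly $fU$), and the geostrophic velocities $u = -p_y/(\rho f)$, $v = p_x/(\rho f)$ implied by an idealized pressure high at 45°N. The Gaussian pressure field, its amplitude and width, the constant density, and the helper function names are all illustrative assumptions, not ECCO output.

```python
import numpy as np

OMEGA = 7.2921e-5  # Earth's rotation rate [rad s-1]
RHO = 1029.0       # constant density assumed for this sketch [kg m-3]


def coriolis_parameter(lat_deg):
    """Coriolis parameter f = 2 * Omega * sin(latitude), in s-1."""
    return 2.0 * OMEGA * np.sin(np.deg2rad(lat_deg))


def rossby_number(U, L, f):
    """Advection scale (U**2 / L) divided by Coriolis scale (f * U)."""
    return U / (np.abs(f) * L)


def geostrophic_velocity(p, dx, dy, f, rho=RHO):
    """Geostrophic (u, v) from pressure p[y, x] via centered differences.

    Rearranging -f v = -(1/rho) p_x and f u = -(1/rho) p_y gives
    u = -p_y / (rho f) and v = p_x / (rho f).
    """
    p_y, p_x = np.gradient(p, dy, dx)  # axis 0 is y, axis 1 is x
    return -p_y / (rho * f), p_x / (rho * f)


f45 = coriolis_parameter(45.0)
print(f"f at 45N: {f45:.4e} s-1")
print(f"Rossby number for U = 0.1 m/s, L = 1000 km: {rossby_number(0.1, 1.0e6, f45):.1e}")

# idealized 1000 Pa pressure high (Gaussian, 300 km width) in a 2000 km box at 45N
x = np.linspace(-1.0e6, 1.0e6, 101)  # [m]
y = np.linspace(-1.0e6, 1.0e6, 101)  # [m]
X, Y = np.meshgrid(x, y)              # both indexed [y, x]
p = 1000.0 * np.exp(-(X**2 + Y**2) / (2.0 * 3.0e5**2))
u, v = geostrophic_velocity(p, x[1] - x[0], y[1] - y[0], f45)

# index 75 lies 500 km from the center (index 50) along each axis
print(f"u 500 km north of the high: {u[75, 50]:+.4f} m/s")
print(f"v 500 km east of the high:  {v[50, 75]:+.4f} m/s")
```

In the Northern Hemisphere ($f > 0$) the sketch gives eastward flow north of the high and southward flow east of it, i.e., clockwise circulation around high pressure. A Rossby number much smaller than 1 is what justifies dropping the advection term.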

If you’ve looked at weather maps that show the clockwise or counter-clockwise flow of winds around areas of high or low pressure, you’ve encountered geostrophic balance in the atmosphere. But what does it look like in the ocean? You’re about to see it in action…using output from the ECCO state estimate.

Download the ECCO output

If you haven’t been through the Using Python to Download ECCO Datasets tutorial yet, I recommend going through it before proceeding further with this tutorial. If you’ve done that tutorial, you can save the ecco_download.py file on your local machine and use it to get the output we need to assess geostrophic balance.

What fields will we need? Look at the geostrophic balance equations. On the left-hand side, the Coriolis parameter $f$ is a function of latitude, which we can compute, so we only need to download the horizontal velocities $u$ and $v$. On the right-hand side we need density $\rho$ and pressure $p$, as well as the grid parameters that allow us to compute spatial derivatives.

Here are the lists of variables contained in ECCOv4r4 datasets on PO.DAAC:

ECCO v4r4 llc90 Grid Dataset Variables - Monthly Means

ECCO v4r4 llc90 Grid Dataset Variables - Daily Means

ECCO v4r4 llc90 Grid Dataset Variables - Daily Snapshots

ECCO v4r4 0.5-Deg Interp Grid Dataset Variables - Monthly Means

ECCO v4r4 0.5-Deg Interp Grid Dataset Variables - Daily Means

ECCO v4r4 Time Series and Grid Parameters

Let’s have a look at the llc90 grid monthly means variable list. A text search for “velocity” shows us that UVEL and VVEL are the horizontal velocity variables we need. Importantly, we need to know the ShortName of the dataset containing these variables, which is ECCO_L4_OCEAN_VEL_LLC0090GRID_MONTHLY_V4R4.

Searching for “density” gives us RHOAnoma (in-situ seawater density anomaly), and “pressure” gives us PHIHYD and PHIHYDcR (ocean hydrostatic pressure anomaly). In this analysis we specifically need PHIHYDcR, the pressure anomaly at constant depth. These fields are all found in the dataset with ShortName ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_MONTHLY_V4R4.
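Why PHIHYDcR rather than PHIHYD? According to the dataset metadata (displayed when the file is opened below), PHIHYDcR is $p/\rho_c - gz$ with the depth $z$ held fixed and $\rho_c$ = rhoConst = 1029 kg m$^{-3}$, while RHOAnoma is the in-situ density anomaly relative to rhoConst. On a surface of constant depth the $gz$ term has no horizontal gradient, so the pressure-gradient term becomes $-\frac{1}{\rho}p_x = -\frac{\rho_c}{\rho_c + \rho'}\,\partial_x(\mathrm{PHIHYDcR})$, where $\rho'$ is RHOAnoma. The sketch below evaluates this along a made-up 1-D transect with uniform 100 km spacing; the values, the spacing, and the function name are illustrative assumptions, and on the actual llc90 grid the spacing varies from cell to cell.

```python
import numpy as np

RHO_CONST = 1029.0  # rhoConst, the ECCO reference density [kg m-3]


def pressure_gradient_term(phihydcr, rho_anom, dx):
    """-(1/rho) dp/dx between adjacent tracer points along a constant-depth transect.

    phihydcr : PHIHYDcR-like values, p/rhoConst - g*z with z fixed [m2 s-2]
    rho_anom : RHOAnoma-like density anomaly relative to RHO_CONST [kg m-3]
    dx       : uniform distance between adjacent tracer points [m]
    """
    dphi_dx = np.diff(phihydcr) / dx                            # = (1/rhoConst) dp/dx
    rho_mid = RHO_CONST + 0.5 * (rho_anom[1:] + rho_anom[:-1])  # full density between points
    return -(RHO_CONST / rho_mid) * dphi_dx                     # [m s-2]


# made-up transect: 5 tracer points 100 km apart at a single depth
phi = np.array([400.00, 400.02, 400.05, 400.08, 400.10])  # [m2 s-2]
rho_anom = np.array([-3.0, -2.9, -2.9, -2.8, -2.7])       # [kg m-3]
result = pressure_gradient_term(phi, rho_anom, dx=1.0e5)
print(result)
```

Because RHOAnoma is only a few kg m$^{-3}$ here compared with 1029, the factor $\rho_c/\rho$ differs from 1 by well under 1% in this example.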

Now that the datasets we need have been identified, let’s download them for the month of January 2000.

Tip: do you find yourself having to say “PO.DAAC”, and wondering if you have to spell out all of those letters? Please don’t…spare yourself! It’s pronounced Poh-dack, and rhymes with “low stack”, as in the amount of homework you can only hope for as a 1st year PO grad student. :-o

[1]:

# # only need this if ecco_download.py is in a different directory than the tutorial notebook

# import sys

# sys.path.append('/home/user/some_other_directory')

# load module into workspace

from ecco_download import ecco_podaac_download

# query to see the syntax needed for the download function, using help(), or put ? after the function name

help(ecco_podaac_download)

Help on function ecco_podaac_download in module ecco_download:

ecco_podaac_download(ShortName, StartDate, EndDate, download_root_dir=None, n_workers=6, force_redownload=False)

This routine downloads ECCO datasets from PO.DAAC. It is adapted from the Jupyter notebooks

created by Jack McNelis and Ian Fenty (https://github.com/ECCO-GROUP/ECCO-ACCESS/blob/master/PODAAC/Downloading_ECCO_datasets_from_PODAAC/README.md)

and modified by Andrew Delman (https://ecco-v4-python-tutorial.readthedocs.io).

Parameters

----------

ShortName: str, the ShortName that identifies the dataset on PO.DAAC.

StartDate,EndDate: str, in 'YYYY', 'YYYY-MM', or 'YYYY-MM-DD' format,

define date range [StartDate,EndDate] for download.

EndDate is included in the time range (unlike typical Python ranges).

ECCOv4r4 date range is '1992-01-01' to '2017-12-31'.

For 'SNAPSHOT' datasets, an additional day is added to EndDate to enable closed budgets

within the specified date range.

n_workers: int, number of workers to use in concurrent downloads.

force_redownload: bool, if True, existing files will be redownloaded and replaced;

if False, existing files will not be replaced.

[2]:

# download file (granule) containing Jan 2000 velocities,

# to default path ~/Downloads/ECCO_V4r4_PODAAC/

vel_monthly_shortname = "ECCO_L4_OCEAN_VEL_LLC0090GRID_MONTHLY_V4R4"

ecco_podaac_download(ShortName=vel_monthly_shortname,\

StartDate="2000-01-02",EndDate="2000-01-31",download_root_dir=None,\

n_workers=6,force_redownload=False)

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_OCEAN_VEL_LLC0090GRID_MONTHLY_V4R4

Total number of matching granules: 1

DL Progress: 100%|###########################| 1/1 [00:06<00:00, 6.01s/it]

=====================================

total downloaded: 30.6 Mb

avg download speed: 5.07 Mb/s

Time spent = 6.032305955886841 seconds

[3]:

# download file (granule) containing Jan 2000 density/pressure anomalies,

# to default path ~/Downloads/ECCO_V4r4_PODAAC/

denspress_monthly_shortname = "ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_MONTHLY_V4R4"

ecco_podaac_download(ShortName=denspress_monthly_shortname,\

StartDate="2000-01-02",EndDate="2000-01-31",download_root_dir=None,\

n_workers=6,force_redownload=False)

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_MONTHLY_V4R4

Total number of matching granules: 1

DL Progress: 100%|###########################| 1/1 [00:07<00:00, 7.11s/it]

=====================================

total downloaded: 30.98 Mb

avg download speed: 4.36 Mb/s

Time spent = 7.113083362579346 seconds

Notice that the StartDate for each of the monthly downloads was set to the 2nd of the month (2000-01-02), not the 1st. If it were set to the 1st, the file for Dec 1999 (which ends on 2000-01-01) would also be downloaded.
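The reason is that consecutive monthly means share an endpoint: the Dec 1999 mean ends at the same instant (2000-01-01) that the Jan 2000 mean begins. A minimal sketch of the date-range check, assuming the granule search treats both the granule's time bounds and the requested [StartDate, EndDate] as closed intervals (our assumption about the search behavior, not taken from PO.DAAC documentation; the helper name is our own):

```python
from datetime import date


def overlaps(granule_start, granule_end, query_start, query_end):
    """True if the closed ranges [granule_start, granule_end] and [query_start, query_end] intersect."""
    return granule_start <= query_end and query_start <= granule_end


# time bounds of two monthly-mean granules (the Dec 1999 mean ends on 2000-01-01)
granules = {
    "Dec 1999": (date(1999, 12, 1), date(2000, 1, 1)),
    "Jan 2000": (date(2000, 1, 1), date(2000, 2, 1)),
}

end_date = date(2000, 1, 31)
for start_date in (date(2000, 1, 1), date(2000, 1, 2)):
    matched = [name for name, (g0, g1) in granules.items()
               if overlaps(g0, g1, start_date, end_date)]
    print(start_date, "->", matched)
# 2000-01-01 -> ['Dec 1999', 'Jan 2000']
# 2000-01-02 -> ['Jan 2000']
```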

Once you have downloaded the monthly files for velocities and density/pressure, let’s also download the daily files for each for 2000-01-01. Consulting the “Daily Means” list of variables, we see that the ShortNames of the datasets needed are very similar: MONTHLY is simply replaced with DAILY.

[4]:

# download file (granule) containing 01 Jan 2000 velocities,

# to default path ~/Downloads/ECCO_V4r4_PODAAC/

vel_daily_shortname = "ECCO_L4_OCEAN_VEL_LLC0090GRID_DAILY_V4R4"

ecco_podaac_download(ShortName=vel_daily_shortname,\

StartDate="2000-01-01",EndDate="2000-01-01",download_root_dir=None,\

n_workers=6,force_redownload=False)

# download file (granule) containing 01 Jan 2000 density/pressure anomalies,

# to default path ~/Downloads/ECCO_V4r4_PODAAC/

denspress_daily_shortname = "ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_DAILY_V4R4"

ecco_podaac_download(ShortName=denspress_daily_shortname,\

StartDate="2000-01-01",EndDate="2000-01-01",download_root_dir=None,\

n_workers=6,force_redownload=False)

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_OCEAN_VEL_LLC0090GRID_DAILY_V4R4

Total number of matching granules: 1

DL Progress: 100%|###########################| 1/1 [00:06<00:00, 6.58s/it]

=====================================

total downloaded: 30.68 Mb

avg download speed: 4.65 Mb/s

Time spent = 6.5920562744140625 seconds

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_DAILY_V4R4

Total number of matching granules: 1

DL Progress: 100%|###########################| 1/1 [00:04<00:00, 4.97s/it]

=====================================

total downloaded: 31.2 Mb

avg download speed: 6.27 Mb/s

Time spent = 4.976189613342285 seconds

Last but not least, we will need certain parameters from the model grid in order to compute gradients (derivatives with respect to $x$ and $y$). These are in a dataset with ShortName ECCO_L4_GEOMETRY_LLC0090GRID_V4R4; they have no time dimension, but setting StartDate and EndDate to any date between 1992-01-01 and 2018-01-01 should download it.
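On the ECCO native (Arakawa C) grid, a horizontal derivative of a tracer-point field such as PHIHYDcR naturally lands on the velocity points between two tracer cells: $\partial_x$ at a 'u' point is the difference of the two neighboring tracer values divided by the distance between the tracer centers. In the geometry dataset that distance is stored in grid parameters such as dxC and dyC (our reading of the MITgcm/ECCO grid variable names; check the dataset's variable list). The NumPy sketch below applies this on a toy tile with made-up values; it assumes the MITgcm convention that dxC[:, i] is the spacing between tracer columns i-1 and i, and it ignores land masks and the connections between tiles.

```python
import numpy as np


def ddx_at_u_points(tracer, dxC):
    """x-derivative of a tracer field at interior 'u' points on one tile.

    tracer : array [j, i] of values at tracer-cell centers
    dxC    : array [j, i]; dxC[:, i] is the distance between tracer
             columns i-1 and i (assumed MITgcm-style convention)
    Returns an array [j, i-1] for the u points at i = 1 .. ni-1.
    """
    return (tracer[:, 1:] - tracer[:, :-1]) / dxC[:, 1:]


def ddy_at_v_points(tracer, dyC):
    """y-derivative of a tracer field at interior 'v' points on one tile."""
    return (tracer[1:, :] - tracer[:-1, :]) / dyC[1:, :]


# 4x5 toy tile: tracer increases by 1 per column and by 2 per row, 50 km spacing
nj, ni = 4, 5
tracer = np.add.outer(2.0 * np.arange(nj), 1.0 * np.arange(ni))
dxC = np.full((nj, ni), 5.0e4)
dyC = np.full((nj, ni), 5.0e4)
print(ddx_at_u_points(tracer, dxC))  # every entry is 1 / 5e4 = 2e-5
print(ddy_at_v_points(tracer, dyC))  # every entry is 2 / 5e4 = 4e-5
```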

[5]:

grid_params_shortname = "ECCO_L4_GEOMETRY_LLC0090GRID_V4R4"

ecco_podaac_download(ShortName=grid_params_shortname,\

StartDate="2000-01-01",EndDate="2000-01-01",download_root_dir=None,\

n_workers=6,force_redownload=False)

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_GEOMETRY_LLC0090GRID_V4R4

Total number of matching granules: 1

DL Progress: 100%|###########################| 1/1 [00:03<00:00, 3.74s/it]

=====================================

total downloaded: 8.57 Mb

avg download speed: 2.29 Mb/s

Time spent = 3.7480597496032715 seconds

Now check the directory where the ECCO output was downloaded. This will be ~/Downloads/ECCO_V4r4_PODAAC/ unless you changed the path with the download_root_dir option. There should be (at least) 5 subfolders, corresponding to 2 datasets each of monthly and daily means, plus a folder for the grid parameters. The monthly mean folders contain 1 file each for Jan 2000, and the daily mean folders contain 2 files each (1999-12-31 and 2000-01-01).
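To do this check from Python, a minimal pathlib sketch that counts the .nc files in each dataset subfolder; the root path assumes the default download location, and the helper name is our own.

```python
from pathlib import Path


def count_netcdf_files(root):
    """Return {subfolder name: number of .nc files} for each subfolder of `root`.

    Returns an empty dict if `root` does not exist.
    """
    root = Path(root).expanduser()
    if not root.is_dir():
        return {}
    return {sub.name: len(list(sub.glob("*.nc")))
            for sub in sorted(root.iterdir()) if sub.is_dir()}


counts = count_netcdf_files("~/Downloads/ECCO_V4r4_PODAAC")
for name, n in counts.items():
    print(f"{n:3d} file(s)  {name}")
if not counts:
    print("no download folders found; check download_root_dir")
```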

Open/view ECCO files

View and plot density/pressure anomalies on a single “tile”

Now let’s load one of our datasets and create a couple of plots. First we’ll load some Python packages that will help us read and plot the data, then we’ll load the monthly density/stratification/pressure dataset into our workspace.

Tip: If you have any errors involving reading the netCDF file in the code below, try installing the netCDF4 or h5netcdf packages, using pip install {pkgname} or conda install -c conda-forge {pkgname}.
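Before installing anything, you can check which of these two packages are already importable. A minimal sketch using only the standard library (the helper name is our own):

```python
import importlib.util


def available_netcdf_backends():
    """Return which of the netCDF4 / h5netcdf packages can be imported in this environment."""
    return [pkg for pkg in ("netCDF4", "h5netcdf")
            if importlib.util.find_spec(pkg) is not None]


backends = available_netcdf_backends()
if backends:
    print("installed netCDF backend(s):", ", ".join(backends))
else:
    print("neither netCDF4 nor h5netcdf found; try: pip install netCDF4")
```

If only h5netcdf is found, passing engine="h5netcdf" to xr.open_mfdataset tells xarray to use that backend explicitly.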

[6]:

import numpy as np

import xarray as xr

import xmitgcm

import xgcm

import glob

from os.path import expanduser,join

import sys

user_home_dir = expanduser('~')

sys.path.append(join(user_home_dir,'ECCOv4-py'))

import ecco_v4_py as ecco

import matplotlib.pyplot as plt

# locate files to load

download_root_dir = join(user_home_dir,'Downloads','ECCO_V4r4_PODAAC')

download_dir = join(download_root_dir,denspress_monthly_shortname)

curr_denspress_files = list(glob.glob(join(download_dir,'*nc')))

print(f'number of files to load: {len(curr_denspress_files)}')

# load file into workspace

# (in this case only 1 file, but this function can load multiple netCDF files with compatible dimensions)

ds_denspress_mo = xr.open_mfdataset(curr_denspress_files, parallel=True, data_vars='minimal',\

coords='minimal', compat='override')

number of files to load: 1

We have just loaded the netCDF file into the workspace with the xarray package (the variables are backed by dask arrays, so the values themselves are read lazily, only when needed). We can look at a summary of the contents of this xarray Dataset:

[7]:

ds_denspress_mo

[7]:

<xarray.Dataset>

Dimensions: (i: 90, i_g: 90, j: 90, j_g: 90, k: 50, k_u: 50, k_l: 50, k_p1: 51, tile: 13, time: 1, nv: 2, nb: 4)

Coordinates: (12/22)

* i (i) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* i_g (i_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j (j) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j_g (j_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* k (k) int32 0 1 2 3 4 5 6 7 8 9 ... 40 41 42 43 44 45 46 47 48 49

* k_u (k_u) int32 0 1 2 3 4 5 6 7 8 9 ... 40 41 42 43 44 45 46 47 48 49

... ...

Zu (k_u) float32 dask.array<chunksize=(50,), meta=np.ndarray>

Zl (k_l) float32 dask.array<chunksize=(50,), meta=np.ndarray>

time_bnds (time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

Z_bnds (k, nv) float32 dask.array<chunksize=(50, 2), meta=np.ndarray>

Dimensions without coordinates: nv, nb

Data variables:

RHOAnoma (time, k, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

DRHODR (time, k_l, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

PHIHYD (time, k, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

PHIHYDcR (time, k, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

Attributes: (12/62)

acknowledgement: This research was carried out by the Jet...

author: Ian Fenty and Ou Wang

cdm_data_type: Grid

comment: Fields provided on the curvilinear lat-l...

Conventions: CF-1.8, ACDD-1.3

coordinates_comment: Note: the global 'coordinates' attribute...

... ...

time_coverage_duration: P1M

time_coverage_end: 2000-02-01T00:00:00

time_coverage_resolution: P1M

time_coverage_start: 2000-01-01T00:00:00

title: ECCO Ocean Density, Stratification, and ...

uuid: 166a1992-4182-11eb-82f9-0cc47a3f43f9

- i: 90

- i_g: 90

- j: 90

- j_g: 90

- k: 50

- k_u: 50

- k_l: 50

- k_p1: 51

- tile: 13

- time: 1

- nv: 2

- nb: 4

- i (i) int32 0 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at tracer and 'v' locations

- swap_dim :

- XC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'v' variables (e.g., VVEL) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89])

- i_g (i_g) int32 0 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at 'u' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- XG

- comment :

- In the Arakawa C-grid system, 'u' (e.g., UVEL) and 'g' variables (e.g., XG) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89])

- j (j) int32 0 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at tracer and 'u' locations

- swap_dim :

- YC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'u' variables (e.g., UVEL) have the same y coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89])

- j_g (j_g) int32 0 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at 'v' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- YG

- comment :

- In the Arakawa C-grid system, 'v' (e.g., VVEL) and 'g' variables (e.g., XG) have the same y coordinate.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89])

- k (k) int32 0 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- long_name :

- grid index in z for tracer variables

- swap_dim :

- Z

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49])

- k_u (k_u) int32 0 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- c_grid_axis_shift :

- 0.5

- swap_dim :

- Zu

- coverage_content_type :

- coordinate

- long_name :

- grid index in z corresponding to the bottom face of tracer grid cells ('w' locations)

- comment :

- First index corresponds to the bottom surface of the uppermost tracer grid cell. The use of 'u' in the variable name follows the MITgcm convention for ocean variables in which the upper (u) face of a tracer grid cell on the logical grid corresponds to the bottom face of the grid cell on the physical grid.

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49])

- k_l (k_l) int32 0 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- c_grid_axis_shift :

- -0.5

- swap_dim :

- Zl

- coverage_content_type :

- coordinate

- long_name :

- grid index in z corresponding to the top face of tracer grid cells ('w' locations)

- comment :

- First index corresponds to the top surface of the uppermost tracer grid cell. The use of 'l' in the variable name follows the MITgcm convention for ocean variables in which the lower (l) face of a tracer grid cell on the logical grid corresponds to the top face of the grid cell on the physical grid.

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49])

- k_p1 (k_p1) int32 0 1 2 3 4 5 6 ... 45 46 47 48 49 50

- axis :

- Z

- long_name :

- grid index in z for variables at 'w' locations

- c_grid_axis_shift :

- [-0.5 0.5]

- swap_dim :

- Zp1

- comment :

- Includes top of uppermost model tracer cell (k_p1=0) and bottom of lowermost tracer cell (k_p1=51).

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50])

- tile (tile) int32 0 1 2 3 4 5 6 7 8 9 10 11 12

- long_name :

- lat-lon-cap tile index

- comment :

- The ECCO V4 horizontal model grid is divided into 13 tiles of 90x90 cells for convenience.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

- time (time) datetime64[ns] 2000-01-16T12:00:00

- long_name :

- center time of averaging period

- axis :

- T

- bounds :

- time_bnds

- coverage_content_type :

- coordinate

- standard_name :

- time

array(['2000-01-16T12:00:00.000000000'], dtype='datetime64[ns]')

- XC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of tracer grid cell center

- units :

- degrees_east

- coordinate :

- YC XC

- bounds :

- XC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Dask array: 411.33 kiB, shape (13, 90, 90), dtype float32; chunk: 411.33 kiB, shape (13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- YC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of tracer grid cell center

- units :

- degrees_north

- coordinate :

- YC XC

- bounds :

- YC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Dask array: 411.33 kiB, shape (13, 90, 90), dtype float32; chunk: 411.33 kiB, shape (13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- XG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of 'southwest' corner of tracer grid cell

- units :

- degrees_east

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Dask array: 411.33 kiB, shape (13, 90, 90), dtype float32; chunk: 411.33 kiB, shape (13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- YG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of 'southwest' corner of tracer grid cell

- units :

- degrees_north

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Dask array: 411.33 kiB, shape (13, 90, 90), dtype float32; chunk: 411.33 kiB, shape (13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- Z (k) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- long_name :

- depth of tracer grid cell center

- units :

- m

- positive :

- up

- bounds :

- Z_bnds

- comment :

- Non-uniform vertical spacing.

- coverage_content_type :

- coordinate

- standard_name :

- depth

Dask array: 200 B, shape (50,), dtype float32; chunk: 200 B, shape (50,), type numpy.ndarray; 2 tasks, 1 chunk

- Zp1 (k_p1) float32 dask.array<chunksize=(51,), meta=np.ndarray>

- long_name :

- depth of tracer grid cell interface

- units :

- m

- positive :

- up

- comment :

- Contains one element more than the number of vertical layers. First element is 0m, the depth of the upper interface of the surface grid cell. Last element is the depth of the lower interface of the deepest grid cell.

- coverage_content_type :

- coordinate

- standard_name :

- depth

Dask array: 204 B, shape (51,), dtype float32; chunk: 204 B, shape (51,), type numpy.ndarray; 2 tasks, 1 chunk

- Zu (k_u) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- units :

- m

- positive :

- up

- coverage_content_type :

- coordinate

- standard_name :

- depth

- long_name :

- depth of the bottom face of tracer grid cells

- comment :

- First element is -10m, the depth of the bottom face of the first tracer grid cell. Last element is the depth of the bottom face of the deepest grid cell. The use of 'u' in the variable name follows the MITgcm convention for ocean variables in which the upper (u) face of a tracer grid cell on the logical grid corresponds to the bottom face of the grid cell on the physical grid. In other words, the logical vertical grid of MITgcm ocean variables is inverted relative to the physical vertical grid.

Dask array: 200 B, shape (50,), dtype float32; chunk: 200 B, shape (50,), type numpy.ndarray; 2 tasks, 1 chunk

- Zl (k_l) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- units :

- m

- positive :

- up

- coverage_content_type :

- coordinate

- standard_name :

- depth

- long_name :

- depth of the top face of tracer grid cells

- comment :

- First element is 0m, the depth of the top face of the first tracer grid cell (ocean surface). Last element is the depth of the top face of the deepest grid cell. The use of 'l' in the variable name follows the MITgcm convention for ocean variables in which the lower (l) face of a tracer grid cell on the logical grid corresponds to the top face of the grid cell on the physical grid. In other words, the logical vertical grid of MITgcm ocean variables is inverted relative to the physical vertical grid.

Dask array: 200 B, shape (50,), dtype float32; chunk: 200 B, shape (50,), type numpy.ndarray; 2 tasks, 1 chunk

- time_bnds (time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

- comment :

- Start and end times of averaging period.

- coverage_content_type :

- coordinate

- long_name :

- time bounds of averaging period

Dask array: 16 B, shape (1, 2), dtype datetime64[ns]; chunk: 16 B, shape (1, 2), type numpy.ndarray; 2 tasks, 1 chunk

- XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. XC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), XC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), XC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), XC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- longitudes of tracer grid cell corners

Dask array: 1.61 MiB, shape (13, 90, 90, 4), dtype float32; chunk: 1.61 MiB, shape (13, 90, 90, 4), type numpy.ndarray; 2 tasks, 1 chunk

- YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. YC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), YC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), YC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), YC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- latitudes of tracer grid cell corners

Dask array: 1.61 MiB, shape (13, 90, 90, 4), dtype float32; chunk: 1.61 MiB, shape (13, 90, 90, 4), type numpy.ndarray; 2 tasks, 1 chunk

- Z_bnds (k, nv) float32 dask.array<chunksize=(50, 2), meta=np.ndarray>

- comment :

- One pair of depths for each vertical level.

- coverage_content_type :

- coordinate

- long_name :

- depths of tracer grid cell upper and lower interfaces

Dask array: 400 B, shape (50, 2), dtype float32; chunk: 400 B, shape (50, 2), type numpy.ndarray; 2 tasks, 1 chunk

- RHOAnoma (time, k, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- In-situ seawater density anomaly

- units :

- kg m-3

- coverage_content_type :

- modelResult

- valid_min :

- -18.81316375732422

- valid_max :

- 25.540061950683594

- comment :

- In-situ seawater density anomaly relative to the reference density, rhoConst. rhoConst = 1029 kg m-3

Dask array: 20.08 MiB, shape (1, 50, 13, 90, 90), dtype float32; chunk: 20.08 MiB, shape (1, 50, 13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- DRHODR (time, k_l, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- Density stratification

- units :

- kg m-3 m-1

- coverage_content_type :

- modelResult

- comment :

- Density stratification: d(sigma) d z-1. Note: density computations are done with in-situ density. The vertical derivatives of in-situ density and locally-referenced potential density are identical The equation of state is a modified UNESCO formula by Jackett and McDougall (1995), which uses the model variable potential temperature as input assuming a horizontally and temporally constant pressure of $p_0=-g \rho_{0} z$.

- valid_min :

- -0.7393171787261963

- valid_max :

- 3.208351699868217e-05

Dask array: 20.08 MiB, shape (1, 50, 13, 90, 90), dtype float32; chunk: 20.08 MiB, shape (1, 50, 13, 90, 90), type numpy.ndarray; 2 tasks, 1 chunk

- PHIHYD (time, k, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- Ocean hydrostatic pressure anomaly

- units :

- m2 s-2

- coverage_content_type :

- modelResult

- comment :

- PHIHYD = p(k) / rhoConst - g z*(k,t), where p = hydrostatic ocean pressure at depth level k, rhoConst = reference density (1029 kg m-3), g is acceleration due to gravity (9.81 m s-2), and z*(k,t) is model depth at level k and time t. Units: p:[kg m-1 s-2], rhoConst:[kg m-3], g:[m s-2], H(t):[m]. Note: includes atmospheric pressure loading. Quantity referred to in some contexts as hydrostatic pressure anomaly. PHIBOT accounts for the model's time-varying grid cell thickness (z* coordinate system). See PHIHYDcR for hydrostatic pressure potential anomaly calculated using time-invariant grid cell thicknesses. PHIHYD is NOT corrected for global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH).

- valid_min :

- 79.53374481201172

- valid_max :

- 783.2001953125

Array Chunk Bytes 20.08 MiB 20.08 MiB Shape (1, 50, 13, 90, 90) (1, 50, 13, 90, 90) Count 2 Tasks 1 Chunks Type float32 numpy.ndarray - PHIHYDcR(time, k, tile, j, i)float32dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- Ocean hydrostatic pressure anomaly at constant depths

- units :

- m2 s-2

- coverage_content_type :

- modelResult

- comment :

- PHIHYD = p(k) / rhoConst - g z(k,t), where p = hydrostatic ocean pressure at depth level k, rhoConst = reference density (1029 kg m-3), g is acceleration due to gravity (9.81 m s-2), and z(k,t) is fixed model depth at level k. Units: p:[kg m-1 s-2], rhoConst:[kg m-3], g:[m s-2], H(t):[m]. Note: includes atmospheric pressure loading. Quantity referred to in some contexts as hydrostatic pressure potential anomaly. PHIHYDcR is calculated with respect to the model's initial, time-invariant grid cell thicknesses. See PHIHYD for hydrostatic pressure anomaly calculated using model's time-variable grid cell thicknesses (z* coordinate system). PHIHYDcR is is NOT corrected for global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH).

- valid_min :

- 78.17041778564453

- valid_max :

- 783.6217041015625

Array Chunk Bytes 20.08 MiB 20.08 MiB Shape (1, 50, 13, 90, 90) (1, 50, 13, 90, 90) Count 2 Tasks 1 Chunks Type float32 numpy.ndarray

- acknowledgement :

- This research was carried out by the Jet Propulsion Laboratory, managed by the California Institute of Technology under a contract with the National Aeronautics and Space Administration.

- author :

- Ian Fenty and Ou Wang

- cdm_data_type :

- Grid

- comment :

- Fields provided on the curvilinear lat-lon-cap 90 (llc90) native grid used in the ECCO model.

- Conventions :

- CF-1.8, ACDD-1.3

- coordinates_comment :

- Note: the global 'coordinates' attribute describes auxillary coordinates.

- creator_email :

- ecco-group@mit.edu

- creator_institution :

- NASA Jet Propulsion Laboratory (JPL)

- creator_name :

- ECCO Consortium

- creator_type :

- group

- creator_url :

- https://ecco-group.org

- date_created :

- 2020-12-18T14:40:54

- date_issued :

- 2020-12-18T14:40:54

- date_metadata_modified :

- 2021-03-15T21:55:10

- date_modified :

- 2021-03-15T21:55:10

- geospatial_bounds_crs :

- EPSG:4326

- geospatial_lat_max :

- 90.0

- geospatial_lat_min :

- -90.0

- geospatial_lat_resolution :

- variable

- geospatial_lat_units :

- degrees_north

- geospatial_lon_max :

- 180.0

- geospatial_lon_min :

- -180.0

- geospatial_lon_resolution :

- variable

- geospatial_lon_units :

- degrees_east

- geospatial_vertical_max :

- 0.0

- geospatial_vertical_min :

- -6134.5

- geospatial_vertical_positive :

- up

- geospatial_vertical_resolution :

- variable

- geospatial_vertical_units :

- meter

- history :

- Inaugural release of an ECCO Central Estimate solution to PO.DAAC

- id :

- 10.5067/ECL5M-ODE44

- institution :

- NASA Jet Propulsion Laboratory (JPL)

- instrument_vocabulary :

- GCMD instrument keywords

- keywords :

- EARTH SCIENCE > OCEANS > OCEAN PRESSURE > WATER PRESSURE, EARTH SCIENCE SERVICES > MODELS > EARTH SCIENCE REANALYSES/ASSIMILATION MODELS, EARTH SCIENCE > OCEANS > OCEAN PRESSURE, EARTH SCIENCE > OCEANS > SALINITY/DENSITY > DENSITY, EARTH SCIENCE > OCEANS > SALINITY/DENSITY

- keywords_vocabulary :

- NASA Global Change Master Directory (GCMD) Science Keywords

- license :

- Public Domain

- metadata_link :

- https://cmr.earthdata.nasa.gov/search/collections.umm_json?ShortName=ECCO_L4_DENS_STRAT_PRESS_LLC0090GRID_MONTHLY_V4R4

- naming_authority :

- gov.nasa.jpl

- platform :

- ERS-1/2, TOPEX/Poseidon, Geosat Follow-On (GFO), ENVISAT, Jason-1, Jason-2, CryoSat-2, SARAL/AltiKa, Jason-3, AVHRR, Aquarius, SSM/I, SSMIS, GRACE, DTU17MDT, Argo, WOCE, GO-SHIP, MEOP, Ice Tethered Profilers (ITP)

- platform_vocabulary :

- GCMD platform keywords

- processing_level :

- L4

- product_name :

- OCEAN_DENS_STRAT_PRESS_mon_mean_2000-01_ECCO_V4r4_native_llc0090.nc

- product_time_coverage_end :

- 2018-01-01T00:00:00

- product_time_coverage_start :

- 1992-01-01T12:00:00

- product_version :

- Version 4, Release 4

- program :

- NASA Physical Oceanography, Cryosphere, Modeling, Analysis, and Prediction (MAP)

- project :

- Estimating the Circulation and Climate of the Ocean (ECCO)

- publisher_email :

- podaac@podaac.jpl.nasa.gov

- publisher_institution :

- PO.DAAC

- publisher_name :

- Physical Oceanography Distributed Active Archive Center (PO.DAAC)

- publisher_type :

- institution

- publisher_url :

- https://podaac.jpl.nasa.gov

- references :

- ECCO Consortium, Fukumori, I., Wang, O., Fenty, I., Forget, G., Heimbach, P., & Ponte, R. M. 2020. Synopsis of the ECCO Central Production Global Ocean and Sea-Ice State Estimate (Version 4 Release 4). doi:10.5281/zenodo.3765928

- source :

- The ECCO V4r4 state estimate was produced by fitting a free-running solution of the MITgcm (checkpoint 66g) to satellite and in situ observational data in a least squares sense using the adjoint method

- standard_name_vocabulary :

- NetCDF Climate and Forecast (CF) Metadata Convention

- summary :

- This dataset provides monthly-averaged ocean density, stratification, and hydrostatic pressure on the lat-lon-cap 90 (llc90) native model grid from the ECCO Version 4 Release 4 (V4r4) ocean and sea-ice state estimate. Estimating the Circulation and Climate of the Ocean (ECCO) state estimates are dynamically and kinematically-consistent reconstructions of the three-dimensional, time-evolving ocean, sea-ice, and surface atmospheric states. ECCO V4r4 is a free-running solution of a global, nominally 1-degree configuration of the MIT general circulation model (MITgcm) that has been fit to observations in a least-squares sense. Observational data constraints used in V4r4 include sea surface height (SSH) from satellite altimeters [ERS-1/2, TOPEX/Poseidon, GFO, ENVISAT, Jason-1,2,3, CryoSat-2, and SARAL/AltiKa]; sea surface temperature (SST) from satellite radiometers [AVHRR], sea surface salinity (SSS) from the Aquarius satellite radiometer/scatterometer, ocean bottom pressure (OBP) from the GRACE satellite gravimeter; sea-ice concentration from satellite radiometers [SSM/I and SSMIS], and in-situ ocean temperature and salinity measured with conductivity-temperature-depth (CTD) sensors and expendable bathythermographs (XBTs) from several programs [e.g., WOCE, GO-SHIP, Argo, and others] and platforms [e.g., research vessels, gliders, moorings, ice-tethered profilers, and instrumented pinnipeds]. V4r4 covers the period 1992-01-01T12:00:00 to 2018-01-01T00:00:00.

- time_coverage_duration :

- P1M

- time_coverage_end :

- 2000-02-01T00:00:00

- time_coverage_resolution :

- P1M

- time_coverage_start :

- 2000-01-01T00:00:00

- title :

- ECCO Ocean Density, Stratification, and Hydrostatic Pressure - Monthly Mean llc90 Grid (Version 4 Release 4)

- uuid :

- 166a1992-4182-11eb-82f9-0cc47a3f43f9

In the summary above, the boldface coordinates (marked with * in the plain-text view) are also dimensions, used to index and locate the other variables in the dataset. For the remaining variables, the second column gives the dimensions of each gridded variable. For example, most of the “data variables” are indexed on the dimensions (time, k, tile, j, i). The global ECCO grid is made up of 13 “tiles” with 90x90 horizontal grid cells each (see this tutorial for more information on the ECCO grid). Here time has length 1 (Jan 2000), k (depth) has length 50, tile has length 13, and j and i each have length 90.
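To make this dimension bookkeeping concrete, here is a minimal sketch that builds a synthetic DataArray with the same dimension names and sizes as the ECCO llc90 fields (the name `fake_field` and its random values are hypothetical stand-ins, not ECCO output) and then selects one depth level on one tile with `isel`, which indexes by 0-based position.

```python
import numpy as np
import xarray as xr

# Synthetic stand-in with the same dimension names and sizes as an ECCO
# llc90 field: 1 time, 50 depth levels, 13 tiles of 90 x 90 cells.
# The values are random numbers, not ECCO output.
shape = (1, 50, 13, 90, 90)
rng = np.random.default_rng(0)
fake_field = xr.DataArray(
    rng.normal(size=shape).astype("float32"),
    dims=("time", "k", "tile", "j", "i"),
    coords={"k": np.arange(50), "tile": np.arange(13),
            "j": np.arange(90), "i": np.arange(90)},
    name="fake_field",
)

print(dict(fake_field.sizes))
# {'time': 1, 'k': 50, 'tile': 13, 'j': 90, 'i': 90}

# isel() selects by 0-based position: surface level (k=0) on the 2nd tile (tile=1)
surf_tile1 = fake_field.isel(k=0, tile=1)
print(surf_tile1.dims, surf_tile1.shape)
# ('time', 'j', 'i') (1, 90, 90)
```

Selecting a single integer position drops that dimension, which is why the result is a (time, j, i) array ready for a 2-D map.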

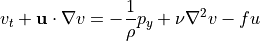

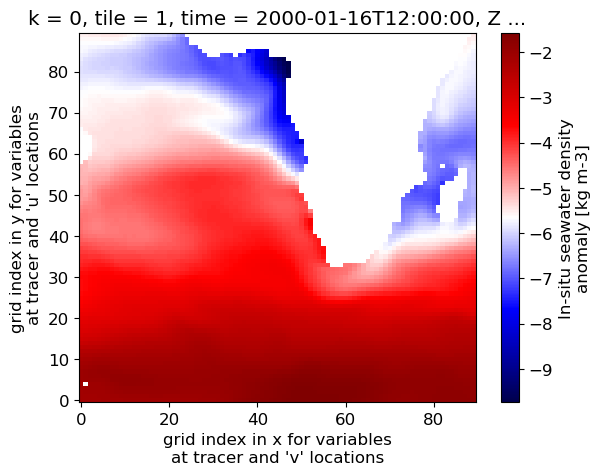

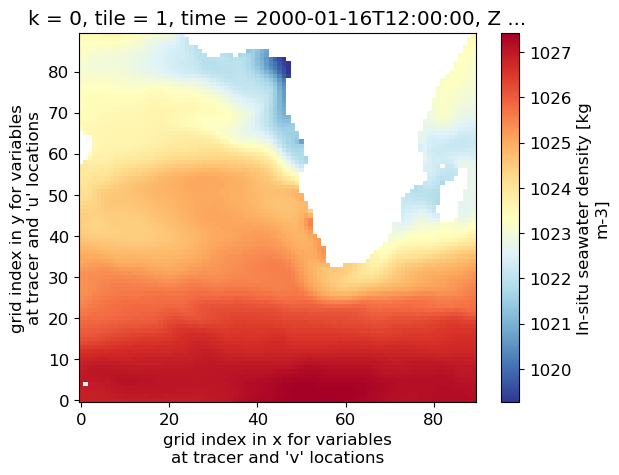

Now let’s plot density anomaly at the surface on a single tile. Python indexing (unlike Matlab or some other programming languages) starts at zero, so the surface index is k=0, and we will plot tile=1 (the 2nd of 13 tiles). For this plot we’ll use the 'seismic' colormap.

Tip: The Python plotting package we are using (Matplotlib) provides a number of named colormaps, and you can also create your own.

[8]:

densanom = ds_denspress_mo.RHOAnoma

densanom_surf = densanom.isel(k=0)

plt.rcParams["font.size"] = 12 # set default font size for plots in this tutorial

densanom_surf_plot = (densanom_surf.isel(tile=1)).plot(cmap='seismic')

So that’s one quick and easy way to get a look at a map of ECCO fields (at least on a single tile). The xarray package is very convenient to use with netCDF files as it retains data and coordinate labels/attributes even as you plot and manipulate the data.

But wait a moment…why are all of the density values negative?? The variable that we just plotted is the “in-situ seawater density anomaly”. But anomaly relative to what? Let’s consult the attributes for this variable, contained in the variable’s DataArray.

Tip: When a variable is subsetted from an xarray Dataset, an xarray DataArray object is created. An xarray DataArray contains only one data variable, but it retains the relevant coordinates from the parent Dataset along with the variable’s own attributes.
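A minimal sketch of this behavior, using a tiny made-up Dataset that mimics the layout of the density file (the values and the trimmed attribute set are illustrative only): pulling out one variable gives a DataArray that still carries its coordinates, including the non-index coordinate `Z`, and its own attributes such as `comment`.

```python
import numpy as np
import xarray as xr

# Tiny Dataset mimicking the ECCO density/pressure file layout
# (values and the trimmed attribute set are illustrative only)
ds = xr.Dataset(
    {"RHOAnoma": (("k", "i"), np.zeros((2, 3), dtype="float32"),
                  {"long_name": "In-situ seawater density anomaly",
                   "units": "kg m-3",
                   "comment": "In-situ seawater density anomaly relative to the "
                              "reference density, rhoConst. rhoConst = 1029 kg m-3"})},
    coords={"k": [0, 1], "i": [0, 1, 2], "Z": ("k", [-5.0, -15.0])},
)

da = ds.RHOAnoma            # attribute-style access returns a DataArray
print(type(da).__name__)    # DataArray
print(da.attrs["units"])    # kg m-3
print("Z" in da.coords)     # True: the non-index coordinate comes along
print(da.attrs["comment"])  # the full, untruncated comment string
```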

[9]:

densanom

[9]:

<xarray.DataArray 'RHOAnoma' (time: 1, k: 50, tile: 13, j: 90, i: 90)>

dask.array<open_dataset-6c17e73e5cb26eda857fa930f66a3fd1RHOAnoma, shape=(1, 50, 13, 90, 90), dtype=float32, chunksize=(1, 50, 13, 90, 90), chunktype=numpy.ndarray>

Coordinates:

* i (i) int32 0 1 2 3 4 5 6 7 8 9 10 ... 80 81 82 83 84 85 86 87 88 89

* j (j) int32 0 1 2 3 4 5 6 7 8 9 10 ... 80 81 82 83 84 85 86 87 88 89

* k (k) int32 0 1 2 3 4 5 6 7 8 9 10 ... 40 41 42 43 44 45 46 47 48 49

* tile (tile) int32 0 1 2 3 4 5 6 7 8 9 10 11 12

* time (time) datetime64[ns] 2000-01-16T12:00:00

XC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

YC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

Z (k) float32 dask.array<chunksize=(50,), meta=np.ndarray>

Attributes:

long_name: In-situ seawater density anomaly

units: kg m-3

coverage_content_type: modelResult

valid_min: -18.81316375732422

valid_max: 25.540061950683594

comment: In-situ seawater density anomaly relative to the reference density, rhoConst. rhoConst = 1029 kg m-3


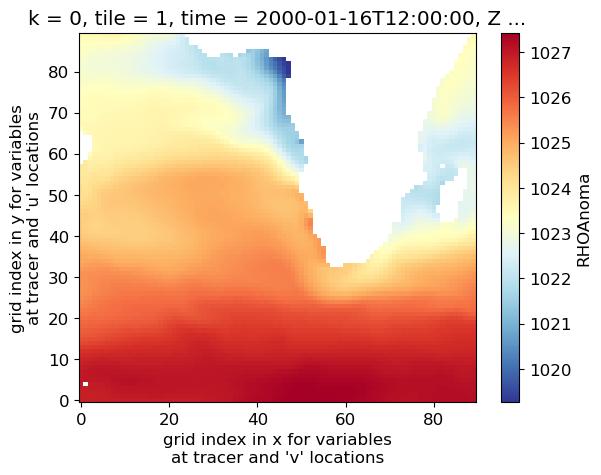

Note the attribute “comment” at the bottom: the density anomaly is relative to a constant reference density, rhoConst = 1029 kg m-3. So to get the actual in-situ density, we add 1029 kg m-3 to the density anomaly.

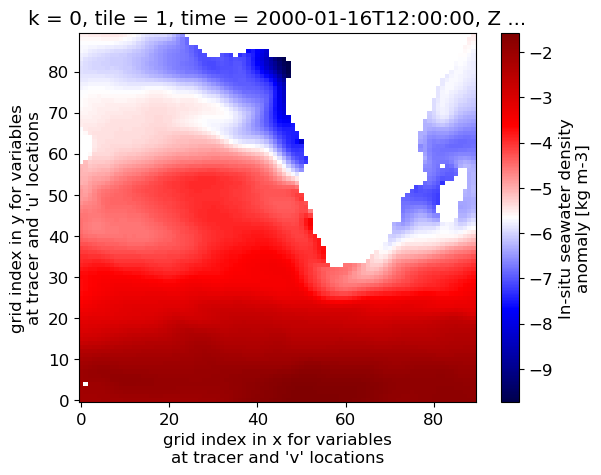

[10]:

rhoConst = 1029.

dens = rhoConst + densanom

dens_surf = dens.isel(k=0)

dens_surf_plot = (dens_surf.isel(tile=1)).plot(cmap='RdYlBu_r') # trying out another colormap

The map looks the same, but the colorbar values are now actual densities. However, the colorbar label, which xarray’s plot function builds automatically from the variable’s name and attributes, is now misleading: the new DataArray (density) was derived from the old one (density anomaly) by arithmetic, so it kept the old variable name but not the long_name and units attributes, which xarray arithmetic does not carry over by default. We can update the variable name and attributes of the dens DataArray accordingly.

[11]:

dens.name = 'RHO'

dens.attrs.update({'long_name': 'In-situ seawater density', 'units': 'kg m-3'})

# re-plot surface density

dens_surf = dens.isel(k=0)

dens_surf_plot = (dens_surf.isel(tile=1)).plot(cmap='RdYlBu_r')
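The cell above modifies dens in place. As an alternative, here is a sketch of a non-mutating version that chains `rename` and `assign_attrs`, both of which return new objects. The small array `densanom_demo` and its values are hypothetical stand-ins for the real RHOAnoma field.

```python
import numpy as np
import xarray as xr

rhoConst = 1029.0  # reference density used by ECCO V4r4 (kg m-3)

# Small synthetic stand-in for the RHOAnoma DataArray (values are made up)
densanom_demo = xr.DataArray(
    np.array([-4.0, -3.5, -2.0], dtype="float32"),
    dims="i",
    name="RHOAnoma",
    attrs={"long_name": "In-situ seawater density anomaly", "units": "kg m-3"},
)

# Build the full density and set its name/attributes in one expression;
# rename() and assign_attrs() return new objects, leaving densanom_demo unchanged
dens_demo = (rhoConst + densanom_demo).rename("RHO").assign_attrs(
    long_name="In-situ seawater density", units="kg m-3"
)

print(dens_demo.name)                # RHO
print(dens_demo.attrs["long_name"])  # In-situ seawater density
print(densanom_demo.name)            # RHOAnoma (original untouched)
```

Because assign_attrs overwrites the long_name and units keys explicitly, the result is the same whether or not your xarray version carries attributes through arithmetic.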

Now let’s plot pressure anomaly, and for a different depth level, k=20 (the 21st depth level counting from the surface).

[12]:

pressanom = ds_denspress_mo.PHIHYDcR

k_plot = 20

pressanom_plot = (pressanom.isel(tile=1,k=k_plot)).plot(cmap='RdYlBu_r')

# change plot title to show depth of plotted level

depth_plot_level = -pressanom.Z[k_plot].values # sign change, so depth is given as positive number

plt.title('Pressure anomaly at ' + str(int(depth_plot_level)) + ' meters depth')

plt.show()

As you can see, the units are different from standard pressure units (which would be N m-2 or kg m-1 s-2). Consult the attributes for this variable:

[13]:

pressanom

[13]:

<xarray.DataArray 'PHIHYDcR' (time: 1, k: 50, tile: 13, j: 90, i: 90)>

dask.array<open_dataset-6c17e73e5cb26eda857fa930f66a3fd1PHIHYDcR, shape=(1, 50, 13, 90, 90), dtype=float32, chunksize=(1, 50, 13, 90, 90), chunktype=numpy.ndarray>

Coordinates:

* i (i) int32 0 1 2 3 4 5 6 7 8 9 10 ... 80 81 82 83 84 85 86 87 88 89

* j (j) int32 0 1 2 3 4 5 6 7 8 9 10 ... 80 81 82 83 84 85 86 87 88 89

* k (k) int32 0 1 2 3 4 5 6 7 8 9 10 ... 40 41 42 43 44 45 46 47 48 49

* tile (tile) int32 0 1 2 3 4 5 6 7 8 9 10 11 12

* time (time) datetime64[ns] 2000-01-16T12:00:00

XC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

YC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

Z (k) float32 dask.array<chunksize=(50,), meta=np.ndarray>

Attributes:

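long_name: Ocean hydrostatic pressure anomaly at constant depths

units: m2 s-2

coverage_content_type: modelResult

comment: PHIHYD = p(k) / rhoConst - g z(k,t), where p = hydrostatic ocean pressure at depth level k, rhoConst = reference density (1029 kg m-3), g is acceleration due to gravity (9.81 m s-2), and z(k,t) is fixed model depth at level k. Units: p:[kg m-1 s-2], rhoConst:[kg m-3], g:[m s-2], H(t):[m]. Note: includes atmospheric pressure loading. Quantity referred to in some contexts as hydrostatic pressure potential anomaly. PHIHYDcR is calculated with respect to the model's initial, time-invariant grid cell thicknesses. See PHIHYD for hydrostatic pressure anomaly calculated using model's time-variable grid cell thicknesses (z* coordinate system). PHIHYDcR is is NOT corrected for global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH).

valid_min: 78.17041778564453

valid_max: 783.6217041015625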


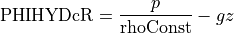

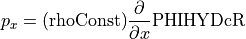

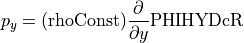

That’s a long comment! But what we need to know is that PHIHYDcR is related to the actual in-situ ocean pressure $p$ by

$$\text{PHIHYDcR} = \frac{p}{\text{rhoConst}} - g z$$

where $z$ is a depth coordinate, and rhoConst and $g$ are constants. This also explains the units: pressure divided by density gives (kg m-1 s-2)/(kg m-3) = m2 s-2. Because PHIHYDcR is computed on fixed, time-invariant depth levels, $z$ has the same value everywhere along a given model level. Therefore, the $g z$ term does not impact the computation of horizontal gradients of pressure, which is what we need for geostrophic balance.
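Here is a quick numerical check of that argument, a sketch with made-up numbers rather than ECCO data: the pressures, the level depth `z_level`, and the uniform spacing `dx` are all hypothetical. On a single level, differencing PHIHYDcR and multiplying by rhoConst recovers the horizontal pressure gradient exactly, because the $g z$ term is the same constant at every point. The same cancellation would hold whichever sign convention is used for that term.

```python
import numpy as np

rhoConst = 1029.0   # reference density (kg m-3)
g = 9.81            # gravitational acceleration (m s-2)

# Hypothetical in-situ pressures (Pa) at 5 points along one model level,
# spaced dx apart; these numbers are made up for illustration
dx = 1.0e5          # grid spacing (m), assumed uniform in this sketch
z_level = -95.0     # fixed depth of this level (m), identical at every point
p = np.array([9.60e5, 9.61e5, 9.63e5, 9.62e5, 9.60e5])

# PHIHYDcR-like quantity on the level, using the relation above
phihyd = p / rhoConst - g * z_level

# Finite-difference horizontal gradients between adjacent points
dp_dx_true = np.diff(p) / dx
dp_dx_from_phihyd = rhoConst * np.diff(phihyd) / dx

# The g*z term is the same at every point on the level, so it cancels
print(np.allclose(dp_dx_true, dp_dx_from_phihyd))  # True
```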

Look at velocity file¶

Lastly, we need the actual ECCO velocity fields to compute the other side of the geostrophic balance equations. The files in the velocity dataset have three data variables (UVEL, VVEL, WVEL), the components of the three-dimensional velocity. Because the output comes from an Arakawa C-grid model, each velocity component is located on a face of the grid cell rather than at its center: UVEL is indexed on i_g (cell faces normal to the x direction), VVEL on j_g (cell faces normal to the y direction), and WVEL on k_l (the top face of each tracer cell), as the dimension listing below shows.
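To compare tracer-point quantities (such as pressure gradients) with velocities, we will need to move fields between these staggered locations. In the ECCO V4r4 files the i_g coordinate carries the attribute c_grid_axis_shift = -0.5, meaning u point i_g = n sits on the face between tracer cells i = n-1 and i = n. The sketch below interpolates a tracer-point field onto u points on a single tile using a simple unweighted two-point average. The function name `tracer_to_u_points` is hypothetical, and the sketch ignores tile boundaries, land masks, and non-uniform grid spacing, all of which a real llc90 calculation must handle (for example with the xgcm package).

```python
import numpy as np

def tracer_to_u_points(tracer):
    """Average a tracer-point field (..., j, i) onto u points (..., j, i_g).

    Assumes u point i_g = n lies midway between tracer points i = n-1 and
    i = n (c_grid_axis_shift = -0.5). Column i_g = 0 needs the neighbouring
    tile, so it is left as NaN in this single-tile sketch.
    """
    tracer = np.asarray(tracer, dtype=float)
    u_pts = np.full(tracer.shape, np.nan)
    u_pts[..., 1:] = 0.5 * (tracer[..., :-1] + tracer[..., 1:])
    return u_pts

# Toy 2 x 4 tracer field indexed (j, i)
T = np.array([[0.0, 2.0, 4.0, 6.0],
              [1.0, 3.0, 5.0, 7.0]])
T_at_u = tracer_to_u_points(T)
print(T_at_u)
```

The same pattern along the j axis (averaging rows j-1 and j) would move a tracer field onto the j_g points where VVEL lives.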

[14]:

# locate files to load

download_root_dir = join(user_home_dir,'Downloads','ECCO_V4r4_PODAAC')

download_dir = join(download_root_dir,vel_monthly_shortname)

curr_vel_files = list(glob.glob(join(download_dir,'*nc')))

print(f'number of files to load: {len(curr_vel_files)}')

# load file into workspace

# (in this case only 1 file, but this function can load multiple netCDF files with compatible dimensions)

ds_vel_mo = xr.open_mfdataset(curr_vel_files, parallel=True, data_vars='minimal',
                              coords='minimal', compat='override')

# check the variables and their associated units

ds_vel_mo

number of files to load: 1

[14]:

<xarray.Dataset>

Dimensions: (i: 90, i_g: 90, j: 90, j_g: 90, k: 50, k_u: 50, k_l: 50, k_p1: 51, tile: 13, time: 1, nv: 2, nb: 4)

Coordinates: (12/22)

* i (i) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* i_g (i_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j (j) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j_g (j_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* k (k) int32 0 1 2 3 4 5 6 7 8 9 ... 40 41 42 43 44 45 46 47 48 49

* k_u (k_u) int32 0 1 2 3 4 5 6 7 8 9 ... 40 41 42 43 44 45 46 47 48 49

... ...

Zu (k_u) float32 dask.array<chunksize=(50,), meta=np.ndarray>

Zl (k_l) float32 dask.array<chunksize=(50,), meta=np.ndarray>

time_bnds (time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

Z_bnds (k, nv) float32 dask.array<chunksize=(50, 2), meta=np.ndarray>

Dimensions without coordinates: nv, nb

Data variables:

UVEL (time, k, tile, j, i_g) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

VVEL (time, k, tile, j_g, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

WVEL (time, k_l, tile, j, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

Attributes: (12/62)

acknowledgement: This research was carried out by the Jet...

author: Ian Fenty and Ou Wang

cdm_data_type: Grid

comment: Fields provided on the curvilinear lat-l...

Conventions: CF-1.8, ACDD-1.3

coordinates_comment: Note: the global 'coordinates' attribute...

... ...

time_coverage_duration: P1M

time_coverage_end: 2000-02-01T00:00:00

time_coverage_resolution: P1M

time_coverage_start: 2000-01-01T00:00:00

title: ECCO Ocean Velocity - Monthly Mean llc90...

uuid: 32bd652c-4182-11eb-bd5c-0cc47a3f82e7

- i_g: 90

- j: 90

- j_g: 90

- k: 50

- k_u: 50

- k_l: 50

- k_p1: 51

- tile: 13

- time: 1

- nv: 2

- nb: 4

- i(i)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at tracer and 'v' locations

- swap_dim :

- XC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'v' variables (e.g., VVEL) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - i_g(i_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at 'u' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- XG

- comment :

- In the Arakawa C-grid system, 'u' (e.g., UVEL) and 'g' variables (e.g., XG) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j(j)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at tracer and 'u' locations

- swap_dim :

- YC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'u' variables (e.g., UVEL) have the same y coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j_g(j_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at 'v' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- YG

- comment :

- In the Arakawa C-grid system, 'v' (e.g., VVEL) and 'g' variables (e.g., XG) have the same y coordinate.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - k(k)int320 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- long_name :

- grid index in z for tracer variables

- swap_dim :

- Z

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49]) - k_u(k_u)int320 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- c_grid_axis_shift :

- 0.5

- swap_dim :

- Zu

- coverage_content_type :

- coordinate

- long_name :

- grid index in z corresponding to the bottom face of tracer grid cells ('w' locations)

- comment :

- First index corresponds to the bottom surface of the uppermost tracer grid cell. The use of 'u' in the variable name follows the MITgcm convention for ocean variables in which the upper (u) face of a tracer grid cell on the logical grid corresponds to the bottom face of the grid cell on the physical grid.

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49]) - k_l(k_l)int320 1 2 3 4 5 6 ... 44 45 46 47 48 49

- axis :

- Z

- c_grid_axis_shift :

- -0.5

- swap_dim :

- Zl

- coverage_content_type :

- coordinate

- long_name :

- grid index in z corresponding to the top face of tracer grid cells ('w' locations)

- comment :

- First index corresponds to the top surface of the uppermost tracer grid cell. The use of 'l' in the variable name follows the MITgcm convention for ocean variables in which the lower (l) face of a tracer grid cell on the logical grid corresponds to the top face of the grid cell on the physical grid.

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49]) - k_p1(k_p1)int320 1 2 3 4 5 6 ... 45 46 47 48 49 50

- axis :

- Z

- long_name :

- grid index in z for variables at 'w' locations

- c_grid_axis_shift :

- [-0.5 0.5]

- swap_dim :

- Zp1

- comment :

- Includes top of uppermost model tracer cell (k_p1=0) and bottom of lowermost tracer cell (k_p1=51).

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - tile(tile)int320 1 2 3 4 5 6 7 8 9 10 11 12

- long_name :

- lat-lon-cap tile index

- comment :

- The ECCO V4 horizontal model grid is divided into 13 tiles of 90x90 cells for convenience.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

- time(time)datetime64[ns]2000-01-16T12:00:00

- long_name :

- center time of averaging period

- axis :

- T

- bounds :

- time_bnds

- coverage_content_type :

- coordinate

- standard_name :

- time

array(['2000-01-16T12:00:00.000000000'], dtype='datetime64[ns]')

- XC(tile, j, i)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of tracer grid cell center

- units :

- degrees_east

- coordinate :

- YC XC

- bounds :

- XC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Array Chunk Bytes 411.33 kiB 411.33 kiB Shape (13, 90, 90) (13, 90, 90) Count 2 Tasks 1 Chunks Type float32 numpy.ndarray - YC(tile, j, i)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of tracer grid cell center

- units :

- degrees_north

- coordinate :

- YC XC

- bounds :

- YC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Dask array: 411.33 kiB in 1 chunk of shape (13, 90, 90); 2 tasks; dtype float32 (numpy.ndarray chunks)

- XG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of 'southwest' corner of tracer grid cell

- units :

- degrees_east

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm documentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Dask array: 411.33 kiB in 1 chunk of shape (13, 90, 90); 2 tasks; dtype float32 (numpy.ndarray chunks)

- YG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of 'southwest' corner of tracer grid cell

- units :

- degrees_north

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm documentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Dask array: 411.33 kiB in 1 chunk of shape (13, 90, 90); 2 tasks; dtype float32 (numpy.ndarray chunks)

- Z (k) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- long_name :

- depth of tracer grid cell center

- units :

- m

- positive :

- up

- bounds :

- Z_bnds

- comment :

- Non-uniform vertical spacing.

- coverage_content_type :

- coordinate

- standard_name :

- depth

Dask array: 200 B in 1 chunk of shape (50,); 2 tasks; dtype float32 (numpy.ndarray chunks)

- Zp1 (k_p1) float32 dask.array<chunksize=(51,), meta=np.ndarray>

- long_name :

- depth of tracer grid cell interface

- units :

- m

- positive :

- up

- comment :

- Contains one element more than the number of vertical layers. First element is 0m, the depth of the upper interface of the surface grid cell. Last element is the depth of the lower interface of the deepest grid cell.

- coverage_content_type :

- coordinate

- standard_name :

- depth

Dask array: 204 B in 1 chunk of shape (51,); 2 tasks; dtype float32 (numpy.ndarray chunks)

- Zu (k_u) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- units :

- m

- positive :

- up

- coverage_content_type :

- coordinate

- standard_name :

- depth

- long_name :

- depth of the bottom face of tracer grid cells

- comment :

- First element is -10m, the depth of the bottom face of the first tracer grid cell. Last element is the depth of the bottom face of the deepest grid cell. The use of 'u' in the variable name follows the MITgcm convention for ocean variables in which the upper (u) face of a tracer grid cell on the logical grid corresponds to the bottom face of the grid cell on the physical grid. In other words, the logical vertical grid of MITgcm ocean variables is inverted relative to the physical vertical grid.

Dask array: 200 B in 1 chunk of shape (50,); 2 tasks; dtype float32 (numpy.ndarray chunks)

- Zl (k_l) float32 dask.array<chunksize=(50,), meta=np.ndarray>

- units :

- m

- positive :

- up

- coverage_content_type :

- coordinate

- standard_name :

- depth

- long_name :

- depth of the top face of tracer grid cells

- comment :

- First element is 0m, the depth of the top face of the first tracer grid cell (ocean surface). Last element is the depth of the top face of the deepest grid cell. The use of 'l' in the variable name follows the MITgcm convention for ocean variables in which the lower (l) face of a tracer grid cell on the logical grid corresponds to the top face of the grid cell on the physical grid. In other words, the logical vertical grid of MITgcm ocean variables is inverted relative to the physical vertical grid.

Dask array: 200 B in 1 chunk of shape (50,); 2 tasks; dtype float32 (numpy.ndarray chunks)

- time_bnds (time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

- comment :

- Start and end times of averaging period.

- coverage_content_type :

- coordinate

- long_name :

- time bounds of averaging period

Dask array: 16 B in 1 chunk of shape (1, 2); 2 tasks; dtype datetime64[ns] (numpy.ndarray chunks)

- XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. XC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), XC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), XC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), XC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', 'northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm documentation for details.

- coverage_content_type :

- coordinate

- long_name :

- longitudes of tracer grid cell corners

Dask array: 1.61 MiB in 1 chunk of shape (13, 90, 90, 4); 2 tasks; dtype float32 (numpy.ndarray chunks)

- YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. YC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), YC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), YC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), YC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', 'northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm documentation for details.

- coverage_content_type :

- coordinate

- long_name :

- latitudes of tracer grid cell corners

Dask array: 1.61 MiB in 1 chunk of shape (13, 90, 90, 4); 2 tasks; dtype float32 (numpy.ndarray chunks)

- Z_bnds (k, nv) float32 dask.array<chunksize=(50, 2), meta=np.ndarray>

- comment :

- One pair of depths for each vertical level.

- coverage_content_type :

- coordinate

- long_name :

- depths of tracer grid cell upper and lower interfaces

Dask array: 400 B in 1 chunk of shape (50, 2); 2 tasks; dtype float32 (numpy.ndarray chunks)
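The comments on Zp1, Zl, Zu, Z and Z_bnds describe a single set of vertical interfaces viewed in several ways. The NumPy sketch below shows how those relationships fit together. Only the first two interface depths (0 m and -10 m) come from the attributes above. The deeper values are made-up placeholders, not the real ECCO V4 levels, and cell centers are assumed to lie midway between interfaces:

```python
import numpy as np

# Interface depths in m, positive up (so depths below the surface are negative).
# 0 and -10 are stated in the attributes; the remaining values are illustrative only.
zp1 = np.array([0.0, -10.0, -20.0, -35.0, -55.0])  # Zp1, dim k_p1 (n + 1 values)

zl = zp1[:-1]                          # Zl, dim k_l: top face of each tracer cell
zu = zp1[1:]                           # Zu, dim k_u: bottom face of each tracer cell
z_bnds = np.stack([zl, zu], axis=-1)   # Z_bnds, dims (k, nv): (upper, lower) pairs
z = z_bnds.mean(axis=-1)               # Z, dim k: cell centers, assumed mid-way
drf = zl - zu                          # layer thicknesses, positive by construction

print("Z centers:", z)
print("thicknesses:", drf)
```

Because Zl and Zu are just Zp1 with its last or first element dropped, all three share the 50-level length of Z only after that one-element trim. This is why Zp1 carries its own dimension, k_p1, with 51 entries.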

- UVEL (time, k, tile, j, i_g) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- Horizontal velocity in the model +x direction

- units :

- m s-1

- mate :

- VVEL

- coverage_content_type :

- modelResult

- direction :

- >0 increases volume

- standard_name :

- sea_water_x_velocity

- comment :

- Horizontal velocity in the +x direction at the 'u' face of the tracer cell on the native model grid. Note: in the Arakawa-C grid, horizontal velocities are staggered relative to the tracer cells with indexing such that +UVEL(i_g,j,k) corresponds to +x fluxes through the 'u' face of the tracer cell at (i,j,k). Do NOT use UVEL for volume flux calculations because the model's grid cell thicknesses vary with time (z* coordinates); use UVELMASS instead. Also, the model +x direction does not necessarily correspond to the geographical east-west direction because the x and y axes of the model's curvilinear lat-lon-cap (llc) grid have arbitrary orientations which vary within and across tiles. See EVEL and NVEL for zonal and meridional velocity.

- valid_min :

- -1.2937312126159668

- valid_max :

- 1.776294231414795
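The UVEL comment above makes two points. First, u sits on the 'u' (west) face of each tracer cell. Second, the model +x direction is not necessarily east. The EVEL and NVEL fields it points to already account for both. For illustration only, here is a minimal NumPy sketch of the usual procedure on a single tile: average u and v to tracer-cell centers, then rotate by the local grid angle.

The function name `uv_to_east_north` is hypothetical. The inputs `cs` and `sn` (cosine and sine of the grid angle, analogous to the CS and SN grid variables) are assumed: they are not part of this dataset and would need to come from the ECCO grid file. The rotation convention below (east = u*cs - v*sn, north = u*sn + v*cs) should be checked against the grid you use. Cells on the tile's eastern or northern edge need values from neighboring tiles, so this sketch leaves them as NaN.

```python
import numpy as np

def uv_to_east_north(u, v, cs, sn):
    """Hypothetical helper: rotate C-grid velocities on ONE tile to east/north.

    u      : (ny, nx) array on the west ('u') faces, indexed (j, i_g)
    v      : (ny, nx) array on the south ('v') faces, indexed (j_g, i)
    cs, sn : (ny, nx) cosine/sine of the local grid angle at tracer centers
             (assumed to come from the ECCO grid file)
    Returns (east, north) at tracer centers; edge cells that would need
    neighboring-tile values are NaN.
    """
    u_c = np.full(u.shape, np.nan)
    v_c = np.full(v.shape, np.nan)
    # Cell i lies between u-faces i and i+1; cell j lies between v-faces j and j+1.
    u_c[:, :-1] = 0.5 * (u[:, :-1] + u[:, 1:])
    v_c[:-1, :] = 0.5 * (v[:-1, :] + v[1:, :])
    # Rotate model (x, y) components into geographic (east, north) components.
    east = u_c * cs - v_c * sn
    north = u_c * sn + v_c * cs
    return east, north
```

For example, on a tile whose +x axis points north (cs = 0, sn = 1), a purely +x flow comes out entirely in the `north` component. For real analysis, the EVEL and NVEL fields named in the comment remain the simpler route.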

Dask array: 20.08 MiB in 1 chunk of shape (1, 50, 13, 90, 90); 2 tasks; dtype float32 (numpy.ndarray chunks)

- VVEL (time, k, tile, j_g, i) float32 dask.array<chunksize=(1, 50, 13, 90, 90), meta=np.ndarray>

- long_name :

- Horizontal velocity in the model +y direction

- units :

- m s-1

- mate :

- UVEL

- coverage_content_type :

- modelResult

- direction :

- >0 increases volume

- standard_name :

- sea_water_y_velocity

- comment :

- Horizontal velocity in the +y direction at the 'v' face of the tracer cell on the native model grid. Note: in the Arakawa-C grid, horizontal velocities are staggered relative to the tracer cells with indexing such that +VVEL(i,j_g,k) corresponds to +y fluxes through the 'v' face of the tracer cell at (i,j,k). Do NOT use VVEL for volume flux calculations because the model's grid cell thicknesses vary with time (z* coordinates); use VVELMASS instead. Also, the model +y direction does not necessarily correspond to the geographical north-south direction because the x and y axes of the model's curvilinear lat-lon-cap (llc) grid have arbitrary orientations which vary within and across tiles. See EVEL and NVEL for zonal and meridional velocity.

- valid_min :

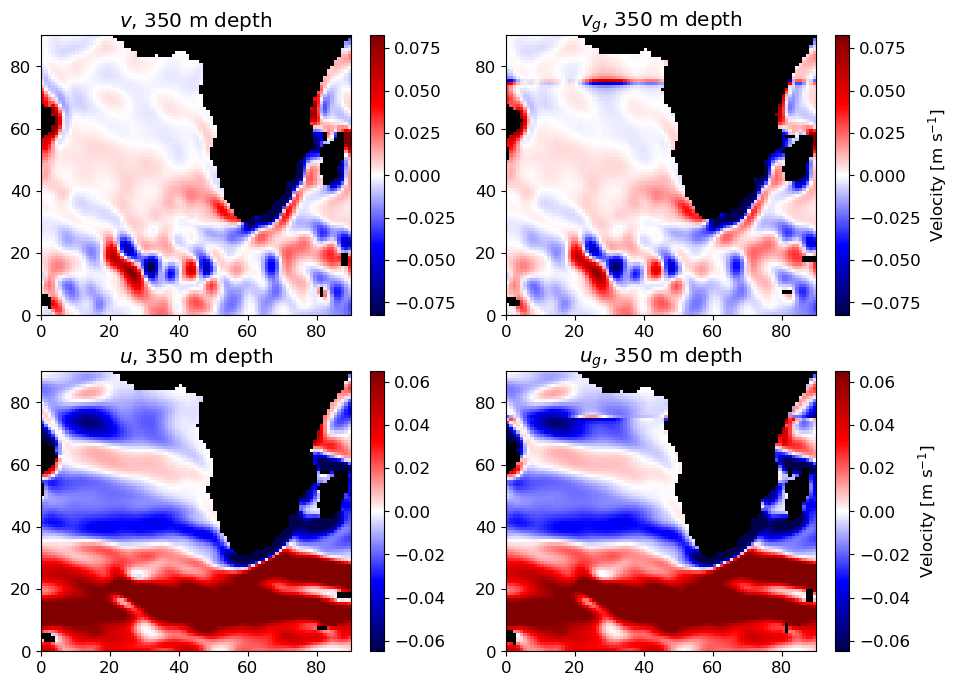

- -1.2608345746994019